Interframe Compression

In our last post, we covered intraframe compression - easy to edit, high quality, and inexpensive to implement. It sounded dreamy. But there are some issues.

Let’s say you want to implement a tape-based HD acquisition format. The world already knows and loves cheap and cheerful miniDV tapes. Why not just put HD on those? Well, HD is more than four times the number of pixels, so we need to run the tape four times faster. Suddenly your 60 minute tape is only good for about 12 minutes. Even worse, you need to engineer motors, write heads, and lots of other bits that work reliably at four times the speed. And you need to hope that those miniDV tapes won’t spontaneously combust. Clearly, this will not do.

Interframe

Interframe compression is based on the assumption that any two sequential frames of video are unlikely to be all that different. A talking head video primarily consists of a moving mouth, and perhaps some blinking eyes. A panning shot primarily consists of the same image, just moving sideways. Why would we go to all the trouble of sending a complete image each time when all we need to do is describe what changed?

That’s where interframe comes along. Interframe compression uses a variety of techniques to encode the changes from one frame to the next. For example, most interframe formats use something called motion estimation and motion prediction. Motion vectors allow the encoder to describe movement within a frame. Let’s take a panning shot for example. As we pan across a scene, some parts of the scene enter one side of the frame, and some parts leave the other side, but the section of the image in the middle just moves laterally. A motion vector tells the codec to take some pixels from one frame, and move them a little bit for the next frame. In doing so, the codec avoids wasting bits sending the same data twice.

A simplified interframe stream would consist of an initial complete image (called an “I-Frame”) followed by a series of frames consisting primarily of motion vectors and partially changed pixels (“P-Frame”). A third type of frame, called a B-Frame is based not only on the frames that have already been passed, but also frames that will occur in the future (more on this later). Finally, another I-Frame closes the sequence. This sequence is called a Group Of Pictures, or GOP.

Interframe compression has been around for decades, but until a few years ago, it was considered a delivery technology. Once you’d finished shooting and editing your video using one of the popular intraframe codecs, you’d compress it with an interframe code to deliver on DVD (MPEG-2), or the web (H.264, VP6, etc). An hour of SD video - 13gigs at DV datarates - could easily compress down to 500 megabytes with a decent interframe codec.

The arrival of HD, with its elevated bitrates, brought interframe encoding into the acquisition and production stage. For most prosumers, their first experiences with interframe acquisition, positive and negative, came with HDV.

The goals with HDV were to squeeze high definition content into DV datarates, maintaining the popular MiniDV tape format. To achieve this, designers turned to the popular MPEG-2 interframe codec. Using a 15-frame GOP (or 12-frame in the case of JVC 720p), HD video was squeezed into the same 25 megabits per second as SD.

Unfortunately, as with many new technologies, HDV wasn’t all roses and sunshine from launch. The tools to ingest and edit the content were, understandably, immature, and issues were often blamed, sometimes justifiably, on the very nature of interframe compression.

One of the first thing producers noticed was an odd lag when they went to capture their footage to computer. You’d click play, hear the camera spool up, but it’d be a second or so before any video appeared. Gone was the instant reaction of DV. The reason for this lag is intrinsic to the structure of a GOP.

A B-frame is based on a frame that will be displayed in the future. Think of a bouncing ball. If we know both where the ball was, and where it will be, we can better predict the path it follows. Because a B-frame is based on both past and future data, we can’t decode the B-Frame until we’ve decoded the P- and potentially I- frames that come after it. So, before your computer can display a single frame of HDV video, it needs to decode a full GOP. There’s additional lag added by most camcorders, which don’t send data down their firewire port until they’ve read an entire GOP from tape.

This is the same reason that, when using a program like ScopeBox with an HDV source, you’ll notice a large amount of lag between movement and the display of that movement on the screen. Users often contact us asking how they can reduce that lag, and we have to explain that it’s a core component of the format.

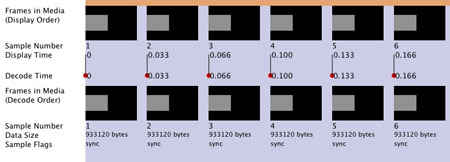

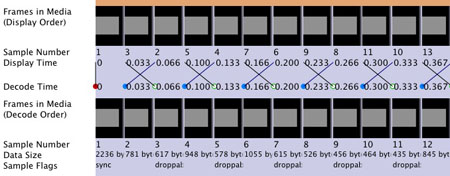

We can see this visually using a couple screen grabs from an application which displays both the decode and display orders for a video stream. The first image shows an uncompressed file, in which frames are decoded in the same order they’re displayed. The second image shows an H.264 file, in which the P-Frames need to be decoded before the B-Frames that precede them. B-Frames are indicated by the “droppable” flag below them.

The issues surrounding GOPs lead to another early limitation with HDV - some editing software could only cut at the boundary between two GOPs. Eventually, editing applications were able to work around this by decompressing whole GOPs internally, and just hiding the extra frames from the user. There are performance implications of this process, though newer machines alleviate much of this.

There’s one final issue that sends shivers down the spine of any editor - generation loss. Remember tape-to-tape linear editing in your betacam suite? No? Sigh. Anyways, the same generation loss that you worked to avoid 20 years ago is back with interframe post production. If you were to take some HDV footage, cut out a chunk in the middle, and then print it back to an HDV tape, the entire stream will be decompressed to a raw intraframe stream, then re-encoded into HDV. Because you’ve made an edit, the GOP boundaries will fall at different points, and generation loss can occur. While this type of generation loss is technically possible with any compressed workflow (rarely do encoders and decoders have one-to-one parity) it’s far more likely with an interframe workflow.

That’s a brief (well, kind of brief) introduction to the differences between interframe and intraframe compression. In an ideal world made of infinite money and infinite bandwidth, we’d be exclusively intraframe. But until we’re all shooting our home movies on ARRI Alexas, interframe is here to stay.